TechLeader Voices #4: Why Do AI Teams Struggle to Deliver Value Once They Scale Past 50 Engineers?

One of America’s top AI leaders on why cloud and AI management fundamentally differ, anchoring every AI project to business outcomes, risk-based frameworks, and cloud-native MLOps. Plus: global news.

“Every AI initiative must be anchored to a crystal-clear business goal... reducing customer churn by 50%, or cutting processing time by 60%. That’s how you separate real value from hype,” said Anil Prasad, one of the top 100 AI leaders in the US, having built platforms that have powered over $4B in outcomes for Fortune 100s and startups.

As an engineering executive managing teams of over 200 and leading transformations in healthcare, fintech, and more, he shares how to distinguish flashy demos from AI that delivers tangible business results at the ground level.

We’ll also see why leaders must move from project management to "transformational stewardship," as Prasad calls it.

He highlights how cloud and AI management work fundamentally differently. While cloud leaders are excellent project managers focused on timelines and technical integration, AI leaders must champion vision and foster cross-functional collaboration. The "biggest challenge I've seen is mostly the human side of it," he notes, when discussing AI transformation.

In this issue, Prasad lays out a pragmatic playbook: starting small with business-aligned pilots, scaling AI teams with a “compliance by design” focus, and sustaining impact long after deployment. I hope you find his insights as actionable as I did.

— Devaang Jain

Editor, TechLeader Voices

In Today’s Issue:

Expert Insights: Prasad’s five-part framework to separate real AI value from hype; compliance by design; risk-based frameworks for compliance-first innovation; orchestrating people and processes at scale; and sustaining impact post-deployment.

Playbook of the Week: We’ve created a free downloadable strategic evaluation tool for leaders based on Prasad’s five-lens framework to evaluate and guide AI initiatives from idea to impact.

Around the World: 91% of production AI models degrade over time (MIT Study); OpenAI CEO Confirms GPT-5 Launch for Summer 2025; Using AI as a “copilot, not commander”: Rethinking AI in Corporate Security and Compliance.

AI Toolbox: Discover an AI tool that turns screen recordings into polished videos and step-by-step guides with voiceovers, smart zoom, and translation in 30+ languages.

Exclusive Invite: We interviewed senior leaders who've led successful AI implementations and distilled strategic insights into our new ECHO Reports. Buy any report you like at half price. Use the code TL50 at checkout.

Expert Insights

Inside a Top US AI Leader’s Playbook for Teams That Deliver Value

We interviewed Anil Prasad, Engineering Leader at Duke Energy. He’s led startups and Fortune 100s across healthcare, fintech, and life sciences. With over two decades of experience leading engineering teams of 200+ and building AI platforms that have driven more than $4B in business impact, Prasad brings a unique blend of technical depth and enterprise strategy.

JPMorgan & Uber’s Model: Deployment and User Adoption (Not Demos)

Many tech leaders are inundated with impressive AI demos, but Prasad emphasizes discipline in evaluating true value. He starts with the business outcome, not the technology.

“Every AI initiative must be anchored to a crystal-clear business goal.”

— Anil Prasad

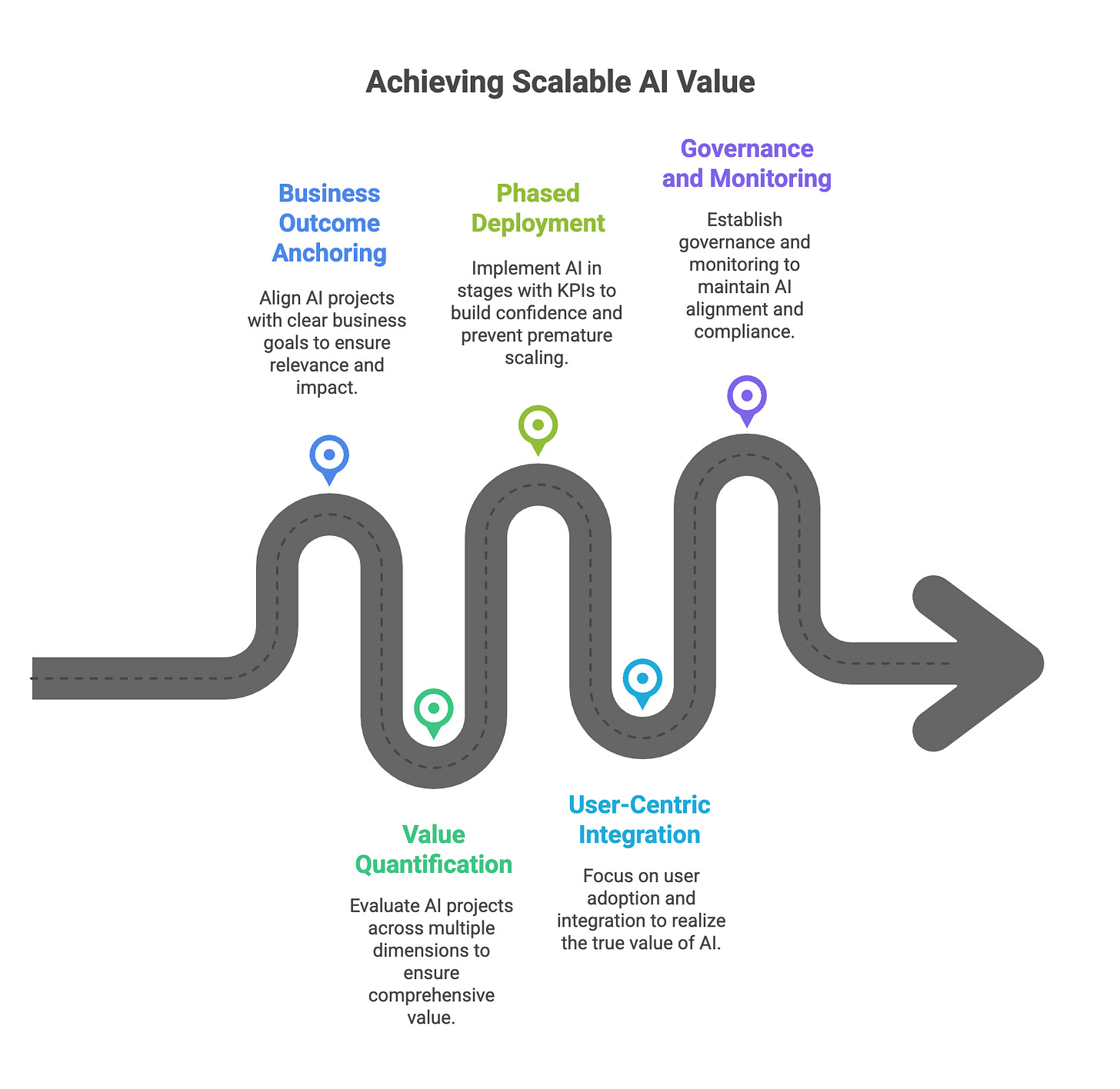

In practice, this means launching business-aligned pilots tied to concrete metrics such as churn reduction or shorter processing times. Prasad also quantifies value across five dimensions:

Financial (revenue or cost impact)

Operational (efficiency and error reduction)

Strategic (competitive advantage)

Risk (compliance/fraud mitigation)

Innovation (new products or research)

This means CxOs must measure ROI beyond just the “cool factor” to quickly tell if an AI project is delivering or just dazzling.

Equally important is a rigorous phased approach: pilot with clear success criteria, prove the concept, then scale with defined KPIs at each phase. Prasad notes that real-world value only comes when AI is integrated into user workflows.

He cites how Uber built an accurate ML model but deemed it a success only after integrating it into drivers’ workflow, ensuring the fancy model actually changed day-to-day operations. User adoption, therefore, is a key part of value realization.

“The real value comes in when AI is integrated across core business systems.”

— Anil Prasad

He warns that too many AI pilots never “graduate” because they aren’t built with enterprise context or scaling in mind. From day one, design for integration, whether that means API-accessible models that plug into legacy systems, or ensuring your AI outputs fit the workflows of business users.

As another example, AI that parses contracts or scans medical images must slot into analysts’ or clinicians’ existing processes (e.g. by piping results into the tools they already use) to truly deliver. C-suite executives should insist on plans for last-mile integration, training, and change management as part of any AI proposal, not as afterthoughts.

Prasad shared one particularly illustrative win: JPMorgan’s pilot to automate commercial loan agreement reviews. The bank targeted a painfully costly process (thousands of lawyer hours on contract review) and applied AI to parse contracts and flag anomalies.

Because the use case addressed a clear business pain with measurable ROI, it gained strong executive sponsorship. The pilot’s success (instant time and cost savings) led to scaling the system firm-wide — the AI platform, dubbed COIN, now handles contract reviews in seconds, eliminating an estimated 360,000 hours of manual work.

Finally, use lean governance and monitoring to provide the guardrails: regular oversight, feedback loops, and iterative tuning prevent drift and keep solutions aligned with business goals. See the 5-Lens Value Filter below for Prasad’s detailed framework.

How to Orchestrate People & Processes at Scale for Real Business Impact

“Scaling [in AI] isn’t about hiring… it’s more about orchestrating diverse talent and building adaptable processes, ensuring everyone can work with AI tools.”

— Anil Prasad

How do you manage an AI organization once it grows past a dozen engineers? Prasad quickly learned from leading 200+ person AI teams that traditional linear scaling falls short.

“In an AI team it is slightly different [than traditional IT] because you need a blend of skill sets and a broad set of stakeholders engaged”

— Anil Prasad

This is also one of the biggest pitfalls he’s seen: treating AI like a typical IT rollout instead of investing in human-in-the-loop processes, knowledge sharing, and agile experimentation. At scale, the glue is culture: a shared understanding that AI success means people, processes, and technology evolving in unison.

I like to think of AI (and, in general, tech) culture-building this way: AI teams must indulge in collecting their own intricate corporate lore to foster a real, breathing culture.

Also, in an AI context, adding more people doesn’t automatically mean more output; instead, value comes from orchestration. Prasad structures large teams with cross-functional pods blending data scientists, ML engineers, software developers, domain experts, product managers, and even QA. This diversity ensures that all the skills for AI productization (from data pipeline to UX) are in the room.

Prasad also draws a striking contrast between cloud and AI transformations in the enterprise. Traditional cloud projects are often linear and technical (migrate X to the cloud, cut infrastructure cost by Y) and are usually handled with project plans, budgets, and timelines.

AI initiatives, by contrast, “are much more cultural in nature… not about deploying new tools, [but] about thinking how people, process and technology integrate and create an impact,” Prasad explains.

Importantly, scaling AI is as much a cultural journey as a technical one: “AI changes day-to-day functions and even job roles,” Prasad says, so managing the human side of change is pivotal.

In our conversation, he also repeatedly emphasized a culture of continuous upskilling (something that almost always comes up in my conversations with tech leaders). AI is a fast-moving target, so teams must constantly learn new tools and methods together. Leaders need to shift from pure project managers to what Prasad calls “transformational stewards,” who champion vision and foster collaboration across silos.

Use Risk-Based Frameworks for Responsible AI in Regulated Environments

When deploying AI in healthcare, finance, or other regulated sectors, Prasad's mantra is “compliance by design.” He recounts tough decisions where using real patient data could boost model accuracy but violate HIPAA.

“Every AI use case needs a risk level or risk score. High-risk areas, say diagnostic tools, trigger the strictest controls (EU AI Act, FDA guidelines). Lower-risk applications like a scheduling bot can have lighter compliance. So, start with a risk-based framework.”

— Anil Prasad

His solution is a risk-based framework: classify each AI use case by risk level (e.g. a clinical decision support algorithm = high risk, an internal workflow helper = low risk) and calibrate controls accordingly. High-risk projects demand built-in bias checks, rigorous validation, and likely regulator engagement, whereas low-risk ones might proceed with standard safeguards.
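To make the idea concrete, here is a minimal sketch of how a team might encode risk tiers and the controls each tier triggers. It is an illustration, not Prasad's actual framework: the two-tier split, the `UseCase` fields, and the classification rule are all assumptions, and the control names simply echo the ones mentioned above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical control lists; the high-risk items echo those named in the text.
CONTROLS = {
    RiskTier.LOW: ["standard safeguards"],
    RiskTier.HIGH: [
        "standard safeguards",
        "bias checks",
        "rigorous validation",
        "regulator engagement",
    ],
}


@dataclass
class UseCase:
    name: str
    # Assumed screening questions; a real framework would ask many more.
    influences_regulated_decisions: bool
    touches_regulated_data: bool


def classify(use_case: UseCase) -> RiskTier:
    """Assign HIGH if either screening question is answered yes."""
    if use_case.influences_regulated_decisions or use_case.touches_regulated_data:
        return RiskTier.HIGH
    return RiskTier.LOW


def required_controls(use_case: UseCase) -> list[str]:
    """Look up the controls that the use case's tier triggers."""
    return CONTROLS[classify(use_case)]


clinical = UseCase("clinical decision support", True, True)
scheduler = UseCase("internal scheduling bot", False, False)
print(clinical.name, "->", required_controls(clinical))
print(scheduler.name, "->", required_controls(scheduler))
```

The point of keeping the mapping in one table is that compliance teams can review and change the controls per tier without touching the classification logic.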

Prasad also advocates a hybrid model strategy in sensitive domains. “Use pre-built LLMs for generic, non-sensitive tasks like summarization,” he says, “but when dealing with PHI or regulated data, opt for tightly controlled proprietary models.”

In other words, don’t subject your crown-jewel data to a black-box model that leaks information. Data governance must be front and center: think multi-tenancy architectures that segregate data by user or role, automated compliance checks on inputs and outputs (one project even flagged unapproved prompts in real-time), and enforcing least-privilege data access for AI systems.

Prasad mentions setting up Business Associate Agreements (BAAs) with vendors and internal stakeholders as a must-do before integrating AI solutions in healthcare. The upshot: be agile in compliance. Rather than viewing governance as a drag, bake it into every sprint.

“Continuous compliance as part of the design, rather than doing compliance at the end… that’s another important factor that differentiates a highly compliant solution.”

— Anil Prasad

Treat compliance like any other requirement: something to iterate on continuously, not an afterthought. The payoff is innovation that can actually see the light of day in strict environments.

How to Sustain “Demo-Day” Performance Post-Deployment

“AI systems require constant tuning, retraining, and human-in-the-loop feedback… unlike cloud, which you can ‘set and forget’, AI needs continuous oversight post-deployment.”

— Anil Prasad

One of Prasad’s core messages in our interview: AI and cloud differ fundamentally, and deploying the AI model is only the beginning. Production AI will “age”: models drift as real-world data shifts, often in unpredictable ways.

Prasad has seen models that performed well initially stray from expectations months later due to subtle data pattern changes. The antidote is a strong MLOps regimen: implement continuous training pipelines (alongside CI/CD, Prasad adds a third practice he fondly calls “Continuous Training” for AI models), so models are regularly retrained on fresh data.

I recently came across an MIT study that notes 91% of models degrade over time, so ignoring retraining is asking for failure. It’s crucial to monitor live model performance for accuracy drops, bias, and data drift indicators.
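One common way to watch for data drift is the Population Stability Index (PSI), which compares the distribution of a feature at training time with what the model sees in production. The sketch below is a generic illustration, not something Prasad described: the function names are hypothetical, and the 0.2 alert threshold is a widely used rule of thumb that teams should tune for their own data.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    base_shares, live_shares = shares(baseline), shares(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(base_shares, live_shares))


def needs_retraining(baseline: Sequence[float], live: Sequence[float],
                     threshold: float = 0.2) -> bool:
    """Flag the feature for review when PSI exceeds the (assumed) threshold."""
    return psi(baseline, live) > threshold


training_scores = [i / 100 for i in range(100)]          # evenly spread baseline
production_scores = [0.9 + i / 1000 for i in range(100)]  # live data bunched at the top
print("drift detected:", needs_retraining(training_scores, production_scores))
```

A check like this only raises a flag; in keeping with the human-in-the-loop theme, the decision to retrain would still go through a person.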

Prasad here again highlights the importance of human oversight even in automated pipelines. He likens it to code review: when an ML engineer wants to update a model or data, have a review process to sanity-check it. This “human-in-the-loop governance” catches issues that automated metrics might miss and ensures that changes align with business intent.

Every user interaction becomes a feedback signal.

“Every user interaction is an opportunity to improve your model. Feedback should be logged, analyzed, and used to retrain or fine-tune models.”

— Anil Prasad

By actively closing the loop (collecting user feedback, error reports, drift metrics), the AI can continuously improve rather than surprise you in production.

Enterprises need to treat AI systems as living products that require maintenance, monitoring, and improvement throughout their lifecycle. With the right MLOps and human-centered processes, we can sustain that “demo day” performance for the long haul.

The 5-Lens Value Filter for AI at Scale

Anil Prasad uses a five-lens framework to evaluate and guide AI initiatives from idea to impact. Download the value filter here.

Around the World

AI Models Degrading Faster in the Wild (MIT Study): New research finds 91% of production AI models degrade over time without retraining, underscoring the need for ongoing MLOps and model monitoring. “AI aging” is real; even high-performing models can decay silently as data shifts. Regular tune-ups are becoming non-negotiable for sustained AI performance.

FDA Deploys New AI System for Compliance (“INTACT”): The U.S. FDA launched an AI platform nicknamed INTACT (Intelligent Network for Transformative Analytics & Compliance Technology) to accelerate drug approvals and enhance safety monitoring. This agency-wide tool ingests mountains of real-world data to flag risks in near real-time, essentially embedding AI into regulatory workflows. It’s a prime example of “compliance by design”: high-impact use cases (like clinical review) get strict oversight and continuous monitoring. The FDA’s move, ahead of schedule and under budget, shows that even highly regulated environments can innovate with AI under a strong risk-based framework, and validates Prasad’s advice that built-in governance and risk calibration enable AI innovation that actually sees the light of day in strict sectors.

Automation ≠ Autopilot: Rethinking AI in Corporate Security and Compliance: Ray Lambert, Security Engineer at Drata, emphasizes that AI in corporate security should be a "copilot, not a commander." He highlights the need for human oversight in approval workflows, ongoing validation of AI models, and auditable controls for any AI-driven action impacting compliance, privacy, or trust-sensitive systems. This reinforces the idea of building guardrails from the start, particularly in regulated environments.

OpenAI CEO Confirms GPT-5 Launch for Summer 2025: Sam Altman announced that GPT-5, OpenAI’s next-gen model, is expected to roll out this summer. Early testers report it’s “materially better” than GPT-4, promising big leaps in reasoning, memory and adaptability. This suggests AI capabilities will continue evolving rapidly as a service, reinforcing the need for continuous updates and integration rather than one-off demos. Leaders should prepare for iterative improvements (much as Prasad emphasizes continuous training/monitoring) and ensure each upgrade is leveraged toward clear business outcomes, not just hype.

AI Toolbox

Making product videos or quick tutorials can be time-consuming. Clueso is an AI tool that transforms screen recordings into professional videos and step-by-step documentation. Its features include AI-generated voiceovers, smart zoom effects, and one-click translation into over 30 languages, making it a valuable tool for organizations aiming to enhance their customer education and internal training processes.

Use Clueso (scroll down to the bottom for the link) to rapidly prototype polished training materials and product walkthroughs.

ECHO Early Signals: From Pilot to Production in Clinical Trials

How can a company actually beat the odds and take an AI pilot all the way to scaled success? A recent TechLeader ECHO Report featuring Techdome, a life sciences firm, offers a compelling example that echoes many of Anil Prasad’s prescriptions.

Techdome implemented a custom AI solution to improve clinical trial operations. The outcome: 20% higher patient retention, 95% fewer data errors, and trials completed 25% faster than before. These are huge improvements in an industry where delays and dropouts can cost millions.

They achieved this by combining custom LLMs with traditional ML and human-in-the-loop processes. Their AI system automated many tedious aspects of running clinical trials (matching patients to trials, sending personalized follow-ups, validating data entries), but with multiple layers of oversight.

Clinicians and patients were involved in refining the AI (through feedback loops and fine-tuning on domain data), and there were multi-layer checkpoints for compliance and errors (e.g. the AI’s recommendations came with confidence scores and required human sign-off in critical cases).
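A checkpoint like the one described above can be sketched as a simple routing rule. This is an illustration only and not Techdome's implementation: the `Recommendation` fields, the 0.9 confidence cutoff, and the choice to also send low-confidence output to review are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    case_id: str       # hypothetical identifier for the trial record
    action: str
    confidence: float  # model confidence score between 0 and 1
    critical: bool     # True when the decision needs human sign-off by policy


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Send critical or low-confidence recommendations to a human reviewer."""
    if rec.critical or rec.confidence < min_confidence:
        return "human_review"
    return "auto_approve"


queue = [
    Recommendation("case-001", "send follow-up reminder", 0.97, critical=False),
    Recommendation("case-002", "enroll in trial arm B", 0.99, critical=True),
    Recommendation("case-003", "correct data entry", 0.62, critical=False),
]
for rec in queue:
    print(rec.case_id, "->", route(rec))
```

Note that a critical case goes to review even at very high confidence: the sign-off requirement is a policy decision, not a statistical one.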

In short, they built the AI with the end-users and regulators in mind from the start. The Techdome case serves to remind us about the principles we discussed: business outcome focus (increase trial success), phased execution, data and compliance rigor, and keeping humans in the loop.

Do you have an AI implementation you’d like to see featured – either from your own work or from a peer? Or would you like to share your insights in an exclusive feature with us? Get in touch by replying to this issue directly, or by writing to team@techleader.ai.

Forward-worthy Insight

“AI isn’t a tech project. It’s a cultural transformation — of people, process, and vision.”

— Anil Prasad

Until Next Time

We hope this issue helped you see through the lens of an industry insider.

Leave a comment to let us know how you found it, and what you’d like to see more of in the future.

We'll return next week with full signal, no noise. See you in the next issue.

Where to find Anil Prasad:

https://www.linkedin.com/in/anilsprasad/

MIT Study on AI Aging:

https://www.nature.com/articles/s41598-022-15245-z

FDA’s INTACT AI System:

Ray Lambert’s Article on AI in Corporate Security and Compliance:

https://thehackernews.com/expert-insights/2025/07/automation-autopilot-rethinking-ai-in.html